After my previous success in getting the SYN6288, a Chinese text-to-speech IC, to produce satisfactory Chinese speech and to pronounce English characters synthetically, I purchased the LD3320, another Chinese voice module, which provides speech recognition as well as MP3 playback.

The module's Chinese voice recognition mechanism can be initialized with the Pinyin transliterations of the Chinese text to be recognized. The module will then listen to the audio sent to its input channel (either from a microphone or from the line-in input) to identify any voice that resembles the programmed list of Chinese words sent during initialization. Audio during MP3 playback is sent via the headphone/lineout (stereo) and speaker (mono) pins. Data communication with the module is done using either a proprietary parallel protocol or SPI.

The board I purchased comes with a condenser microphone and 2.54mm connection headers for easy prototyping:

Board Schematics

The detailed schematic of the board is shown below:

The connection headers on the breakout board expose several useful pins, namely VDD, GND, parallel/SPI communication lines and audio input/output pins. The detailed pin description can be found below, where ^ denotes an active low signal:

| Pin | Description |
| --- | --- |
| VDD | 3.3V supply |
| GND | Ground |
| RST^ | Reset signal |
| MD | Low for parallel mode, high for serial mode |
| INTB^ | Interrupt output signal |
| A0 | Address or data selection for parallel mode. If high, P0-P7 carry an address; if low, data |
| CLK | Clock input for LD3320 (2-34 MHz) |
| RDB^ | Read control signal for parallel mode |
| CSB^/SCS^ | Chip select signal (parallel mode) / SPI chip select signal (serial mode) |
| WRB^/SPIS^ | Write enable (parallel mode) / connect to GND in serial mode |
| P0 | Data bit 0 for parallel mode / SDI pin in serial mode |
| P1 | Data bit 1 for parallel mode / SDO pin in serial mode |
| P2 | Data bit 2 for parallel mode / SDCK pin in serial mode |
| P3 | Data bit 3 for parallel mode |
| P4 | Data bit 4 for parallel mode |
| P5 | Data bit 5 for parallel mode |
| P6 | Data bit 6 for parallel mode |
| P7 | Data bit 7 for parallel mode |
| MBS | Microphone bias |
| MONO | Mono line in |
| LINL/LINR | Stereo line in (left/right) |
| HPOL/HPOR | Headphone output (left/right) |
| LOUTL/LOUTR | Line out (left/right) |
| MICP/MICN | Microphone input (positive/negative) |
| SPOP/SPON | Speaker output (positive/negative) |

The LD3320 requires an external clock to be fed to pin CLK, which is already provided by the breakout board via a 22.1184 MHz crystal. No external components are needed, even for the audio input/output lines, as the breakout board already contains all the required parts.

To use SPI for communication, connect MD to VDD, WRB^/SPIS^ to GND and use pins P0, P1 and P2 for SDI, SDO and SDCK respectively. For simplicity, the rest of this article will use SPI to communicate with this module.
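As a rough sketch of what a register access over SPI looks like: the LD3320 driver code that circulates online frames a register write as the three bytes 0x04, address, data, and a register read as 0x05, address, followed by a dummy byte while the value is clocked out on SDO. This framing is an assumption on my part and is not stated above, so check it against the datasheet. The helper names are hypothetical, and the functions only build the byte frames, which can then be handed to whatever SPI routine your microcontroller provides.

```c
#include <stddef.h>
#include <stdint.h>

/* Command bytes as seen in commonly circulated LD3320 driver code
   (assumption: verify against the datasheet before relying on them). */
#define LD_SPI_CMD_WRITE 0x04u
#define LD_SPI_CMD_READ  0x05u

/* Fill frame[] with the bytes for writing `value` to register `reg`.
   Returns the number of bytes to clock out while SCS^ is held low. */
size_t ld_build_write_frame(uint8_t reg, uint8_t value, uint8_t frame[3])
{
    frame[0] = LD_SPI_CMD_WRITE;
    frame[1] = reg;
    frame[2] = value;
    return 3;
}

/* Fill frame[] with the bytes for reading register `reg`. The third byte
   is a dummy; the register value arrives on SDO while it is shifted out. */
size_t ld_build_read_frame(uint8_t reg, uint8_t frame[3])
{
    frame[0] = LD_SPI_CMD_READ;
    frame[1] = reg;
    frame[2] = 0x00u;
    return 3;
}
```

Keeping the framing separate from the actual SPI transfer makes it easy to check the bytes on a PC or with a logic analyser before involving the hardware.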

Official documentation (in Chinese only) can be found on icroute's website. The Chinese datasheet can be downloaded here. With the help of onlinedoctranslator, I made an English translation, which can be downloaded here.

Breakout board issues

Before you proceed to explore the LD3320, please be aware of possible PCB issues that can feed wrong signals to the IC and waste precious time debugging the circuit. In my case, after getting the sample program to compile and run on my PIC microcontroller only to find out that it did not work, I spent almost a day checking various connections and the initialization code to no avail. I could easily have debugged till the end of time without getting it to work if I hadn't noticed, by chance, a 22.1184 MHz sine wave on the pin marked as WRB, which raised the suspicion that the PCB traces had issues.

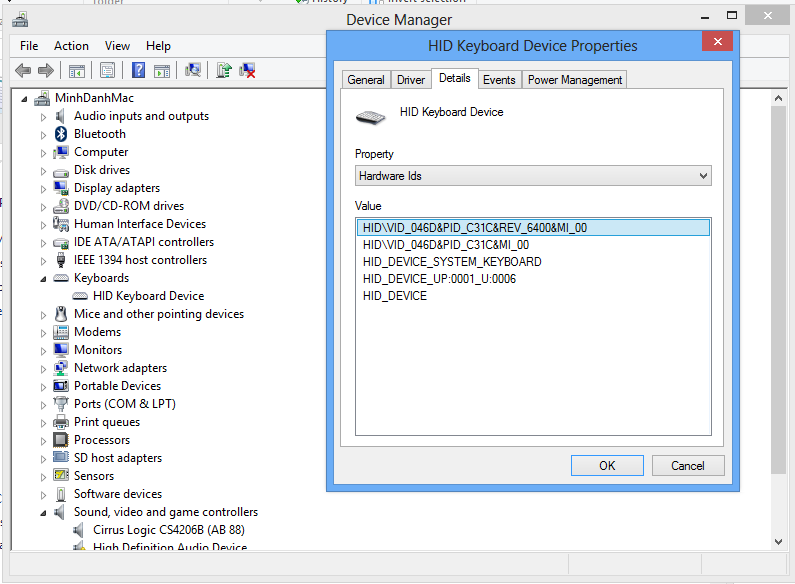

I decided to use a multimeter and cross-check the connections between the labelled pins on the connection headers and the actual pins on the IC while referring to the LD3320 pin configuration described in the datasheet:

This is the pin description printed on the connection header at the back of the board:

For the right and bottom connection headers, the labelling is correct. However, further tests showed that the electret condenser microphone (ECM) is connected in reverse polarity and that there are several other connection issues between the microphone and the LD3320. The connections on the PCB did not seem to match the board schematic, which could indicate either a faulty PCB or a mismatched schematic. Either way, the microphone input still did not work even with the ECM replaced, and I could only get the module to work using the line-in input (more on that later) after removing the ECM from the board. The presence of the microphone, even if unused, disturbs the line-in input channel and prevents the module from working.


To my surprise, apart from the GND/VDD pins which are fortunately correctly labelled (otherwise I could have damaged the module by applying power in reverse polarity), the rest of the pin labels on the left and right columns of the left connection header are swapped! For example, RSTB should be INTB, CLK should be WRB and vice versa. This explained why I got a clock signal on the WRB pin as their labels are swapped! The correct labelling for these pins should be:

Therefore, before you apply power to the board, check that the pin labelling is correct - or at least check that the VDD and GND pins are correctly labelled. Note that your board may have no issues at all, or issues different from those described above.

Speech recognition

The only examples I could find for this IC are coocox's LD3320 driver and some 8051 code downloadable from here. Comparing that code with the initialization protocol provided in the datasheet, I summarized the steps to use this module as follows:

1. Reset the module by pulling the RST pin low for a short while, and then high again.

2. Initialize the module for ASR (Automatic Speech Recognition) mode. In particular, set the input channel to be used for speech recognition.

3. Initialize the list of Chinese words to be recognized. For each Chinese word, send the Pinyin transliteration of the word (without tone marks) in ASCII (e.g. bei jing for 北京) and an associated code (a number between 1 and 255) to identify this word. The codes for the words in the list need not be continuous and multiple words can have the same identification code.

4. Look for an interrupt on the INTB pin, which will trigger when a voice has been detected on the input channel.

5. When the interrupt happens, instruct the LD3320 to perform speech recognition, which will analyse the detected voice for any patterns similar to the list of Chinese words programmed in step 3. If a match is found, the chip will return the identification code associated with the word.

6. After a speech recognition task is completed, go back to step 1 to be ready for another recognition task.
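The word list from step 3 and the code returned in step 5 can be modelled as a small table on the microcontroller side. The sketch below is only an illustration: the type and function names are hypothetical, the actual register writes that load the list into the LD3320 are left out, and the rule that entries contain only lowercase letters and spaces is an assumption of this sketch rather than a documented requirement.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* One vocabulary entry: an identification code (1-255) and the Pinyin
   transliteration without tone marks, e.g. "bei jing". */
typedef struct {
    uint8_t     code;
    const char *pinyin;
} ld_word_t;

/* Example list; the codes need not be contiguous. */
static const ld_word_t ld_words[] = {
    { 1,  "bei jing"    },
    { 2,  "shang hai"   },
    { 7,  "chong qing"  },
    { 20, "tian an men" },
};
#define LD_WORD_COUNT (sizeof ld_words / sizeof ld_words[0])

/* Return 1 if the entry looks usable: non-zero code and a non-empty string
   of lowercase ASCII letters and spaces (assumption of this sketch). */
int ld_word_is_valid(const ld_word_t *w)
{
    const char *p;
    if (w->code == 0 || w->pinyin == NULL || w->pinyin[0] == '\0')
        return 0;
    for (p = w->pinyin; *p != '\0'; p++) {
        if (!((*p >= 'a' && *p <= 'z') || *p == ' '))
            return 0;
    }
    return 1;
}

/* Map a code returned by the chip back to the first word registered under
   it, or NULL if the code is not in the list. */
const char *ld_lookup(const ld_word_t *list, size_t count, uint8_t code)
{
    size_t i;
    for (i = 0; i < count; i++) {
        if (list[i].code == code)
            return list[i].pinyin;
    }
    return NULL;
}
```

Because several words may share one code, the lookup returns the first match; if you need to tell such words apart, give each its own code.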

To specify which input channel will be used for speech recognition, use register 0x1C (ADC Switch Control). Write 0x0B for microphone input (MICP/MICN pins), 0x07 for stereo input (LINL/LINR pins) and 0x23 for mono input (MONO pin).
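In code, the three values for register 0x1C can be hidden behind an enum so that the rest of the program does not deal in magic numbers. The enum and function names here are hypothetical; only the register address and the three values come from the description above.

```c
#include <stdint.h>

#define LD_REG_ADC_SWITCH 0x1Cu  /* ADC Switch Control */

typedef enum {
    LD_INPUT_MIC,          /* MICP/MICN pins */
    LD_INPUT_LINE_STEREO,  /* LINL/LINR pins */
    LD_INPUT_MONO          /* MONO pin */
} ld_input_t;

/* Return the value to write to register 0x1C for the chosen input. */
uint8_t ld_adc_switch_value(ld_input_t input)
{
    switch (input) {
    case LD_INPUT_MIC:         return 0x0Bu;
    case LD_INPUT_LINE_STEREO: return 0x07u;
    case LD_INPUT_MONO:        return 0x23u;
    }
    return 0x0Bu;  /* unknown value: fall back to the microphone input */
}
```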

In my tests, as the microphone input channel cannot be used due to the PCB issues mentioned above, I used the stereo input channels with an ECM and a preamplifier circuit based on a single NPN transistor. The output of this circuit is then connected to the LINL/LINR audio input pins of the LD3320. Below is the diagram of the preamplifier:

To achieve the highest recognition quality possible, several registers of the LD3320 are used to adjust the sensitivity and selectivity of the recognition process:

- Register 0x32 (ADC Gain) can be set to values between 0x00 and 0x7F. The greater the value, the greater the input audio gain and the more sensitive the recognition. However, higher values may result in increased noise and mistaken identifications. Set to 0x10-0x2F for a noisy environment. In other circumstances, set to 0x40-0x55.

- Register 0xB3 (ASR Voice Activity Detection). If set to 0 (disabled), all sounds detected on the input channel will be taken as voice and trigger the INTB interrupt. Otherwise, INTB will only be triggered when a voice is detected on the audio input channel, whereas other static noises will be ignored. Set to a value between 1 and 80 to control the sensitivity of this detection - the lower the value, the higher the sensitivity. In general, the higher the signal-to-noise ratio (SNR) in the working environment, the higher the recommended value of this register. Default is 0x12.

- Register 0xB4 (ASR VAD Start) defines how long continuous speech should be detected before it is recognized as voice. Set to a value between 1 and 80 (10 to 800 ms). Default is 0x0F (150 ms).

- Register 0xB5 (ASR VAD Silence End) defines how long a silence period should be detected at the end of a speech segment before the speech is considered to have ended. Set to 20-200 (200-2000 ms). Default is 60 (600 ms).

- Register 0xB6 (ASR VAD Voice Max Length) defines the longest possible duration of a detected speech segment. Set to 5-200 (500 ms to 20 seconds). Default is 60 (6 seconds).
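The three timing registers use different step sizes, which follow from the ranges quoted above: 10 ms per step for 0xB4 and 0xB5, and 100 ms per step for 0xB6. A minimal sketch of helpers that convert a duration in milliseconds to a register value, clamped to the allowed range, could look like this (the function names are hypothetical):

```c
#include <stdint.h>

/* Clamp v to the range [lo, hi] and narrow it to a register byte. */
static uint8_t ld_clamp(unsigned v, unsigned lo, unsigned hi)
{
    if (v < lo) v = lo;
    if (v > hi) v = hi;
    return (uint8_t)v;
}

/* Register 0xB4 (ASR VAD Start): 10 ms per step, valid range 1-80. */
uint8_t ld_vad_start_from_ms(unsigned ms)
{
    return ld_clamp(ms / 10u, 1u, 80u);
}

/* Register 0xB5 (ASR VAD Silence End): 10 ms per step, valid range 20-200. */
uint8_t ld_vad_silence_end_from_ms(unsigned ms)
{
    return ld_clamp(ms / 10u, 20u, 200u);
}

/* Register 0xB6 (ASR VAD Voice Max Length): 100 ms per step, range 5-200. */
uint8_t ld_vad_max_len_from_ms(unsigned ms)
{
    return ld_clamp(ms / 100u, 5u, 200u);
}
```

With these helpers, the defaults listed above correspond to 150 ms, 600 ms and 6000 ms respectively.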

The LD3320 also supports playback of MP3 data received via SPI. Playback is done using the following steps:

1. Reset and initialize the LD3320 in MP3 mode.

2. Set the correct audio output channel for audio playback.

3. Send the first segment of the MP3 data to be played.

4. Check if the MP3 has finished playing. If so, stop playback.

5. If not, continue to send more MP3 data and go back to step 4.
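Steps 3 to 5 amount to a feed loop: push bytes while the chip can accept them, then come back later with the rest. The sketch below shows that loop in isolation. The two callbacks stand in for the real register accesses (how the LD3320 signals that it has room for more data is not covered here), and the `sim_` functions are only a stand-in for the chip so the loop can be tried on a PC. All names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hardware access is abstracted behind two callbacks. */
typedef struct {
    int  (*has_room)(void *ctx);               /* nonzero if a byte can be sent */
    void (*write_byte)(void *ctx, uint8_t b);  /* push one byte of MP3 data */
    void *ctx;
} ld_mp3_io_t;

/* Send as many bytes as the chip accepts right now; return how many were
   sent so the caller can resume from data + sent on the next call. */
size_t ld_mp3_feed(const ld_mp3_io_t *io, const uint8_t *data, size_t len)
{
    size_t sent = 0;
    while (sent < len && io->has_room(io->ctx)) {
        io->write_byte(io->ctx, data[sent]);
        sent++;
    }
    return sent;
}

/* Stand-in for the chip: a buffer of fixed capacity that fills as bytes
   are written and empties when sim_drain() is called ("playback"). */
typedef struct {
    size_t capacity;
    size_t level;
} sim_fifo_t;

static int sim_has_room(void *ctx)
{
    sim_fifo_t *f = (sim_fifo_t *)ctx;
    return f->level < f->capacity;
}

static void sim_write(void *ctx, uint8_t b)
{
    sim_fifo_t *f = (sim_fifo_t *)ctx;
    (void)b;  /* the simulation only counts bytes */
    f->level++;
}

static void sim_drain(sim_fifo_t *f, size_t n)
{
    f->level -= (n > f->level) ? f->level : n;
}
```

In a real program the caller would invoke `ld_mp3_feed` from the main loop or from the INTB handler, reading the next block from the SD card whenever the previous one has been fully sent.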

Three types of audio output are supported: headphone (stereo), line out (stereo) and speaker (mono). The headphone and line out channels are always enabled, whereas the speaker channel must be enabled independently. Line out and headphone output volume can be adjusted by writing a value to bits 5-1 of registers 0x81 and 0x83 respectively, with 0x00 indicating maximum volume. Speaker output volume can be changed by writing to bits 5-2 of register 0x83, with 0x00 indicating maximum volume.
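Since the volume fields do not start at bit 0, the value has to be shifted into place before it is written. A minimal sketch, assuming the remaining bits of each register can be left at zero (check the datasheet, as other bits may carry enable flags), with hypothetical function names:

```c
#include <stdint.h>

/* Line out / headphone: 5-bit field in bits 5-1, where 0 is the loudest
   setting and 31 the quietest. Values above 31 are limited to 31. */
uint8_t ld_pack_hp_volume(uint8_t attenuation)
{
    if (attenuation > 31u)
        attenuation = 31u;
    return (uint8_t)(attenuation << 1);
}

/* Speaker: 4-bit field in bits 5-2, where 0 is the loudest setting and
   15 the quietest. Values above 15 are limited to 15. */
uint8_t ld_pack_spk_volume(uint8_t attenuation)
{
    if (attenuation > 15u)
        attenuation = 15u;
    return (uint8_t)(attenuation << 2);
}
```

Note that the argument is an attenuation rather than a volume: a larger number means quieter output, matching the convention that 0x00 is the maximum volume.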

According to the datasheet, the speaker output can drive an 8-ohm speaker. However, in my tests, connecting an 8-ohm speaker to the speaker output caused the module to stop playback unexpectedly, presumably due to high power consumption, although the sound through the speaker remained clear. The headphone and line out channels seem to be stable and deliver good quality audio.

I also tried connecting a PAM8403 audio amplifier to the line-out channel to achieve stereo output using two 8-ohm speakers. At first, with the PAM8403 sharing the same power and ground lines as the LD3320, the same issue of unexpected playback termination persisted, even with decoupling capacitors added. Suspecting that the issue was due to disturbance caused by the 8-ohm speakers sharing the same power lines, I used a separate power supply for the PAM8403, and the LD3320 then played MP3 audio smoothly with no other issues.

Demo video

I made a video showing the module working with a PIC microcontroller and an ST7735 128x160 16-bit color LCD to display the speech recognition results. It shows the module trying to recognize proper names in Chinese (bei jing 北京, shang hai 上海, hong kong 香港, chong qing 重庆, tian an men 天安门) and other words such as a li ba ba. A single beep means that the speech is recognized, while a double beep indicates unrecognized speech. Although the speech recognition quality depends heavily on the input audio, volume level and other environmental conditions, overall the detection sensitivity and selectivity seem satisfactory, as can be seen from the video.

The end of the video shows the stereo playback of an MP3 song stored on the SD card, using a PAM8403 amplifier whose output is fed into two 8-ohm speakers. Notwithstanding the background noise, presumably due to the effects of breadboard stray capacitance at high frequency (22.1184 MHz for this module), MP3 playback quality seems reasonably good and comparable to the VS1053 module.

The entire MPLAB X demo project for this module can be downloaded here.

See also

SYN6288 Chinese Speech Synthesis Module

Interfacing VS1053 audio encoder/decoder module with PIC using SPI